PyTorch Tutorial: Source Implementation of the Built-in Models

Translated from

https://pytorch.org/docs/stable/torchvision/models.html

This article mainly explains how to use torchvision.models.

torchvision.models

torchvision.models contains the following models:

- AlexNet

- VGG

- ResNet

- SqueezeNet

- DenseNet

- Inception v3

Randomly initialized models

    import torchvision.models as models

    # Calling a constructor with no arguments builds the model with random weights.
    resnet18 = models.resnet18()
    alexnet = models.alexnet()
    vgg16 = models.vgg16()
    squeezenet = models.squeezenet1_0()
    densenet = models.densenet161()
    inception = models.inception_v3()

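Using pre-trained weights

PyTorch provides pre-trained models, which are loaded through torch.utils.model_zoo. Pass pretrained=True to a constructor to build a model with trained weights.

For example: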

    resnet18 = models.resnet18(pretrained=True)
    alexnet = models.alexnet(pretrained=True)
    squeezenet = models.squeezenet1_0(pretrained=True)
    vgg16 = models.vgg16(pretrained=True)
    densenet = models.densenet161(pretrained=True)
    inception = models.inception_v3(pretrained=True)

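Instantiating a pre-trained model automatically downloads its weights to a cache directory. The cache location can be set with the TORCH_MODEL_ZOO environment variable; see torch.utils.model_zoo.load_url() for details.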
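A minimal sketch of redirecting the weight cache is shown below. The directory name `torch_model_cache` is hypothetical, and the variable must be set before any weights are downloaded. Note that this is an assumption tied to the PyTorch version the article describes: more recent releases read TORCH_HOME instead, so check the documentation for your installed version.

```python
import os
import tempfile

# Hypothetical cache location; any writable directory works.
cache_dir = os.path.join(tempfile.gettempdir(), "torch_model_cache")
os.makedirs(cache_dir, exist_ok=True)

# Set the variable named in this article before constructing a pre-trained model.
os.environ["TORCH_MODEL_ZOO"] = cache_dir

# A later call such as models.resnet18(pretrained=True) would then be
# expected to store its downloaded weights under cache_dir.
```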

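Some models contain modules that behave differently during training and evaluation, such as batch normalization. Use model.train() or model.eval() to switch between the two modes; see train() or eval() for details.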
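The difference between the two modes can be observed directly on a batch normalization layer. The sketch below uses a tiny stand-in network (hypothetical, not one of the torchvision models) so that it runs quickly: in training mode the layer updates its running statistics, while in evaluation mode it leaves them unchanged.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Tiny stand-in network with one batch normalization layer.
net = nn.Sequential(
    nn.Conv2d(3, 4, kernel_size=3, padding=1),
    nn.BatchNorm2d(4),
)
bn = net[1]
x = torch.randn(8, 3, 16, 16)

# Training mode: the forward pass updates the running mean and variance.
net.train()
before = bn.running_mean.clone()
net(x)
train_updates_stats = not torch.equal(before, bn.running_mean)

# Evaluation mode: the stored statistics are used and left untouched.
net.eval()
frozen = bn.running_mean.clone()
with torch.no_grad():
    net(x)
eval_keeps_stats = torch.equal(frozen, bn.running_mean)

print(train_updates_stats, eval_keeps_stats)
```

For inference with a pre-trained model, calling model.eval() first is therefore the usual choice.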

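All pre-trained models expect input images normalized in the same way: mini-batches of 3-channel RGB images of shape (3 x H x W), where H and W are at least 224. The images must be loaded into the range [0, 1] and then normalized using mean = [0.485, 0.456, 0.406] and std = [0.229, 0.224, 0.225].

You can use the following transform to normalize: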

    import torchvision.transforms as transforms

    normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
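To make the arithmetic concrete, the normalization subtracts the per-channel mean and divides by the per-channel standard deviation. The sketch below applies that formula to single RGB pixels in plain Python; `normalize_pixel` is a hypothetical helper written for illustration, not part of torchvision.

```python
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def normalize_pixel(rgb, mean=MEAN, std=STD):
    """Apply (value - mean) / std to each channel of one RGB pixel in [0, 1]."""
    return [(v - m) / s for v, m, s in zip(rgb, mean, std)]


# A pixel equal to the dataset mean maps to zero in every channel.
mean_pixel = normalize_pixel([0.485, 0.456, 0.406])

# A pure white pixel ends up a little over two standard deviations above zero.
white_pixel = normalize_pixel([1.0, 1.0, 1.0])

print(mean_pixel)
print(white_pixel)
```

After normalization the values are no longer confined to [0, 1]; they are centered around zero, which is the input range the pre-trained weights were fitted to.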

An example of this normalization applied to ImageNet can be found here:

https://github.com/pytorch/examples/blob/42e5b996718797e45c46a25c55b031e6768f8440/imagenet/main.py#L89-L101

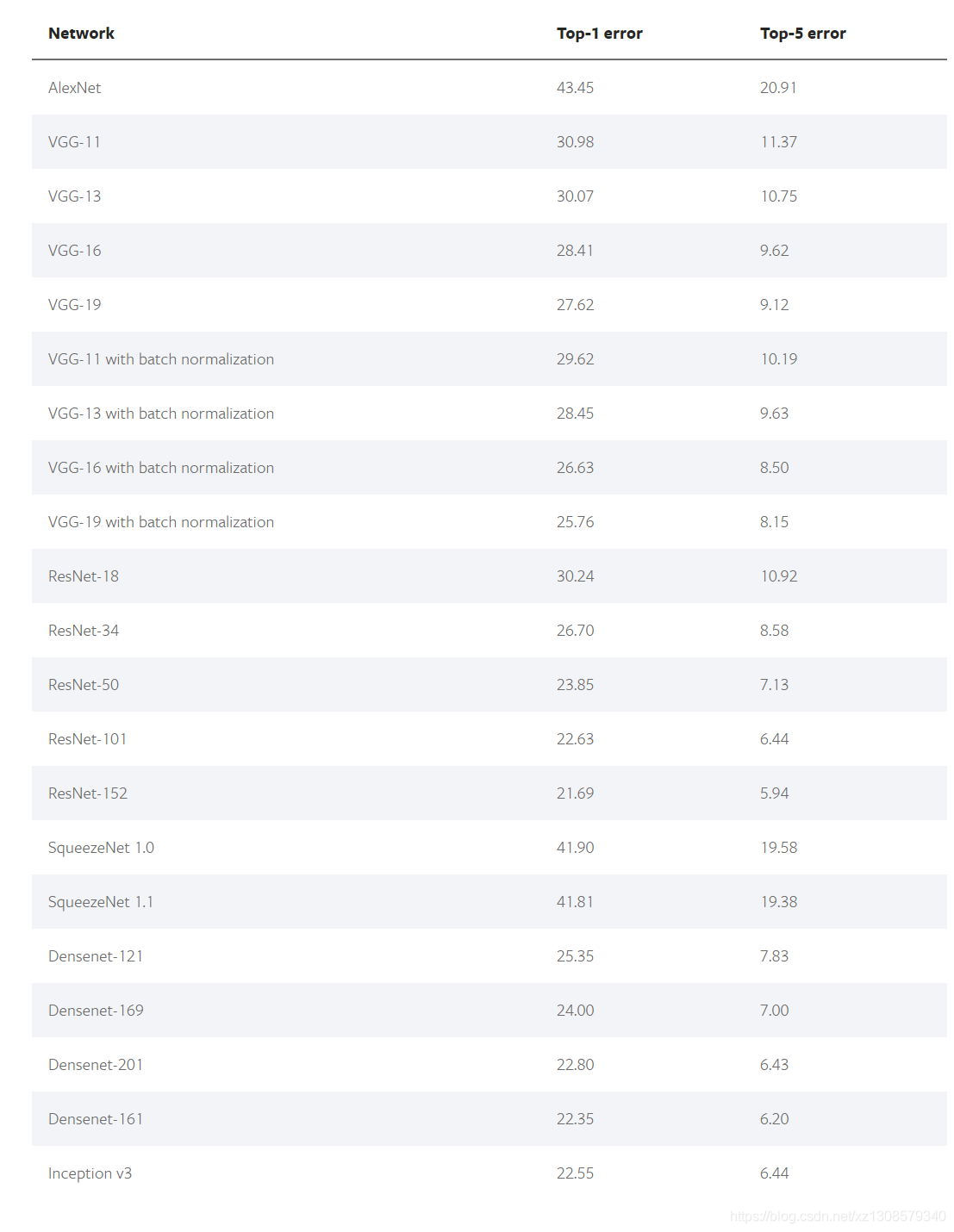

The current performance of these models is as follows:

The constructors for each model are listed below; for the implementation details, read the source code.

### ALEXNET

torchvision.models.alexnet(pretrained=False, **kwargs)

AlexNet model architecture from the “One weird trick…” paper.

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

### VGG

torchvision.models.vgg11(pretrained=False, **kwargs)

VGG 11-layer model (configuration “A”)

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

torchvision.models.vgg11_bn(pretrained=False, **kwargs)

VGG 11-layer model (configuration “A”) with batch normalization

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

torchvision.models.vgg13(pretrained=False, **kwargs)

VGG 13-layer model (configuration “B”)

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

torchvision.models.vgg13_bn(pretrained=False, **kwargs)

VGG 13-layer model (configuration “B”) with batch normalization

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

torchvision.models.vgg16(pretrained=False, **kwargs)

VGG 16-layer model (configuration “D”)

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

torchvision.models.vgg16_bn(pretrained=False, **kwargs)

VGG 16-layer model (configuration “D”) with batch normalization

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

torchvision.models.vgg19(pretrained=False, **kwargs)

VGG 19-layer model (configuration “E”)

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

torchvision.models.vgg19_bn(pretrained=False, **kwargs)

VGG 19-layer model (configuration “E”) with batch normalization

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

### RESNET

torchvision.models.resnet18(pretrained=False, **kwargs)

Constructs a ResNet-18 model.

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

torchvision.models.resnet34(pretrained=False, **kwargs)

Constructs a ResNet-34 model.

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

torchvision.models.resnet50(pretrained=False, **kwargs)

Constructs a ResNet-50 model.

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

torchvision.models.resnet101(pretrained=False, **kwargs)

Constructs a ResNet-101 model.

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

torchvision.models.resnet152(pretrained=False, **kwargs)

Constructs a ResNet-152 model.

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

### SQUEEZENET

torchvision.models.squeezenet1_0(pretrained=False, **kwargs)

SqueezeNet model architecture from the “SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size” paper.

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

torchvision.models.squeezenet1_1(pretrained=False, **kwargs)

SqueezeNet 1.1 model from the official SqueezeNet repo. SqueezeNet 1.1 has 2.4x less computation and slightly fewer parameters than SqueezeNet 1.0, without sacrificing accuracy.

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

### DENSENET

torchvision.models.densenet121(pretrained=False, **kwargs)

Densenet-121 model from “Densely Connected Convolutional Networks”

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

torchvision.models.densenet169(pretrained=False, **kwargs)

Densenet-169 model from “Densely Connected Convolutional Networks”

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

torchvision.models.densenet161(pretrained=False, **kwargs)

Densenet-161 model from “Densely Connected Convolutional Networks”

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

torchvision.models.densenet201(pretrained=False, **kwargs)

Densenet-201 model from “Densely Connected Convolutional Networks”

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet

### INCEPTION V3

torchvision.models.inception_v3(pretrained=False, **kwargs)

Inception v3 model architecture from “Rethinking the Inception Architecture for Computer Vision”.

Parameters: pretrained (bool) – If True, returns a model pre-trained on ImageNet
